Introduction

Stata 15 provides a convenient and elegant way of fitting Bayesian regression models by simply prefixing the estimation command with bayes. You can choose from 45 supported estimation commands. All of Stata’s existing Bayesian features are supported by the new bayes prefix. You can use default priors for model parameters or select from many prior distributions. I will demonstrate the use of the bayes prefix for fitting a Bayesian logistic regression model and explore the use of Cauchy priors (available as of the update on July 20, 2017) for regression coefficients. Read more…
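As a rough illustration of the prefix syntax described above, here is a minimal sketch that fits a Bayesian logistic regression with Cauchy priors on the coefficients. The dataset (Stata's bundled auto data), the rescaled variable weight1000, the prior scales, and the random-number seed are all assumptions chosen for illustration; they are not taken from the post.

```stata
* Illustrative only: auto.dta ships with Stata; variables and scales are assumptions
sysuse auto, clear
generate weight1000 = weight/1000      // rescale so coefficients are comparable

* Cauchy(0, 2.5) priors on the slopes and Cauchy(0, 10) on the intercept
bayes, prior({foreign: mpg weight1000}, cauchy(0, 2.5)) ///
       prior({foreign: _cons}, cauchy(0, 10))           ///
       rseed(15): logit foreign mpg weight1000

* Inspect MCMC convergence for one coefficient
bayesgraph diagnostics {foreign:mpg}
```

Omitting the prior() options makes bayes fall back to its default priors, which is a convenient baseline for comparison.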

Introduction

Federal Reserve Economic Data (FRED), a database maintained by the Federal Reserve Bank of St. Louis, makes available hundreds of thousands of time series measuring economic and social outcomes. The new Stata 15 command import fred imports data from this repository.

In this post, I show how to use import fred to import data from FRED. I also discuss some of the metadata that import fred provides that can be useful in data management. I then demonstrate how to use an advanced feature: importing multiple revisions of series whose observations are updated over time. Read more…
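A hedged sketch of what a basic import might look like follows. The series codes (UNRATE, GDP), the date range, and the vintage dates are assumptions picked for illustration, and YOUR_API_KEY is a placeholder for a personal FRED API key; check help import fred for the exact options available in your version.

```stata
* Placeholder key: replace YOUR_API_KEY with a key obtained from the FRED website
set fredkey YOUR_API_KEY

* Import one series over a date range (series code and dates are assumptions)
import fred UNRATE, daterange(2000-01-01 2017-12-31) clear
describe

* Request the series as it was published on specific dates (vintages);
* the dates here are hypothetical examples
import fred GDP, vintage(2015-01-30 2016-01-29) clear
describe
```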

In the past, we’ve had users ask if Stata could import Twitter data. So we asked one of our interns, Dawson Deere (currently working on his computer science degree at Texas A&M University), to see if he could write a new command to do this. He used Stata 15’s improved Java plugins feature to write a new twitter2stata command. To install twitter2stata, type

ssc install twitter2stata, replace

Read more…

Introduction

Dynamic stochastic general equilibrium (DSGE) models are used in macroeconomics to model the joint behavior of aggregate time series like inflation, interest rates, and unemployment. They are used to analyze policy, for example, to answer the question, “What is the effect of a surprise rise in interest rates on inflation and output?” To answer that question we need a model of the relationship among interest rates, inflation, and output. DSGE models are distinguished from other models of multiple time series by their close connection to economic theory. Macroeconomic theories consist of systems of equations that are derived from models of the decisions of households, firms, policymakers, and other agents. These equations form the DSGE model. Because the DSGE model is derived from theory, its parameters can be interpreted directly in terms of the theory.

In this post, I build a small DSGE model that is similar to models used for monetary policy analysis. I show how to estimate the parameters of this model using the new dsge command in Stata 15. I then shock the model with a contraction in monetary policy and graph the response of model variables to the shock. Read more…

Initial thoughts

Nonparametric regression is similar to linear regression, Poisson regression, and logit or probit regression; it predicts a mean of an outcome for a set of covariates. If you work with the parametric models mentioned above or other models that predict means, you already understand nonparametric regression and can work with it.

The main difference between parametric and nonparametric models lies in the assumptions they make about the functional form of the mean conditional on the covariates. Parametric models assume the mean is a known function of \(\mathbf{x}\beta\). Nonparametric regression makes no assumptions about the functional form.

In practice, this means that nonparametric regression yields consistent estimates of the mean function that are robust to functional form misspecification. But we do not need to stop there. With npregress, introduced in Stata 15, we may obtain estimates of how the mean changes when we change discrete or continuous covariates, and we can use margins to answer other questions about the mean function.

Below I illustrate how to use npregress and how to interpret its results. As you will see, the results are interpreted in the same way you would interpret the results of a parametric model using margins. Read more…
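To make the workflow above concrete, here is a minimal sketch of a kernel regression followed by margins. The dataset (Stata's bundled auto data), the choice of variables, the number of bootstrap replications, and the seed are assumptions for illustration, not the example from the post.

```stata
* Illustrative only: auto.dta and the variables below are assumptions
sysuse auto, clear

* Nonparametric kernel regression of mpg on one continuous and one discrete covariate;
* bootstrap standard errors (replications kept small to limit run time)
npregress kernel mpg weight i.foreign, vce(bootstrap, reps(50) seed(12345))

* Mean of mpg when every car is treated as domestic, then as foreign
margins foreign, vce(bootstrap, reps(50) seed(12345))
```

The margins output is read the same way as after a parametric model: each row is an estimate of the mean outcome at the stated covariate value.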

We announced Stata 15 today. It’s a big deal because this is Stata’s biggest release ever.

I posted to Statalist this morning and listed sixteen of the most important new features. Here on the blog I will say more about them, and you can learn even more by visiting our website and seeing the Stata 15 features page.

I go into depth below on the sixteen highlighted features.

Read more…

In my last post, I demonstrated how to use putexcel to recreate common Stata output in Microsoft Excel. Today I want to show you how to create custom reports for arbitrary variables. I am going to create tables that combine cell counts with row percentages, and means with standard deviations. But you could modify the examples below to include column percentages, percentiles, standard errors, confidence intervals, or any other statistic. I am also going to pass the variable names into my programs using local macros. This will allow me to create the same report for arbitrary variables by simply assigning new variable names to the macros. You could extend this idea by creating a do-file for each report and passing the variable names into the do-files. This is another important step toward our goal of automating the creation of reports in Excel.

Today’s blog post is Read more…
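The idea of driving a report through local macros can be sketched roughly as follows. The macro names (RowVar, ColVar, ContVar), the output filename, the cell positions, and the use of the auto data are all hypothetical choices made for illustration.

```stata
* Illustrative only: macro names, filename, and cell positions are assumptions
sysuse auto, clear
local RowVar  "rep78"
local ColVar  "foreign"
local ContVar "mpg"

* Store the cell counts of the two-way table in a matrix and write it to Excel
tabulate `RowVar' `ColVar', matcell(counts)
putexcel set custom_report.xlsx, replace
putexcel A1 = "`RowVar' by `ColVar' (cell counts)"
putexcel A2 = matrix(counts)

* Combine a mean and a standard deviation in a single cell
summarize `ContVar'
local mean_sd = string(r(mean), "%4.1f") + " (" + string(r(sd), "%4.1f") + ")"
putexcel A9 = "`ContVar': mean (SD)"
putexcel B9 = "`mean_sd'"
```

Changing the three local macros at the top is enough to produce the same report for a different set of variables.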

Initial thoughts

Estimating causal relationships from data is one of the fundamental endeavors of researchers, but causality is elusive. In the presence of omitted confounders, endogeneity, omitted variables, or a misspecified model, estimates of predicted values and effects of interest are inconsistent; causality is obscured.

A controlled experiment to estimate causal relations is an alternative. Yet conducting a controlled experiment may be infeasible. Policy makers cannot randomize taxation, for example. In the absence of experimental data, an option is to use instrumental variables or a control function approach.

Stata has many built-in estimators to implement these potential solutions and tools to construct estimators for situations that are not covered by built-in estimators. Below I illustrate both possibilities for a linear model and, in a later post, will talk about nonlinear models. Read more…
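As a small sketch of the two approaches for a linear model, the simulation below compares a built-in instrumental-variables estimator with a hand-built control function. The data-generating process, variable names, sample size, and seed are assumptions invented for illustration.

```stata
* Simulated data: x is endogenous because it shares the unobservable u with y
clear
set seed 12345
set obs 1000
generate z = rnormal()                  // instrument
generate u = rnormal()                  // unobserved confounder
generate x = 0.8*z + 0.5*u + rnormal()
generate y = 1 + 2*x + u                // true effect of x is 2

* Naive regression: inconsistent for the effect of x
regress y x

* Built-in estimator: two-stage least squares
ivregress 2sls y (x = z)

* Control function: add the first-stage residual as a regressor
regress x z
predict double vhat, residuals
regress y x vhat
```

In this linear setup the control-function coefficient on x matches the 2SLS estimate, but the standard errors from the final regress ignore that vhat is estimated, so they would need correcting (for example, by bootstrapping both steps).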

In my last post, I showed how to use putexcel to write simple expressions to Microsoft Excel and format the resulting text and cells. Today, I want to show you how to write more complex expressions such as macros, graphs, and matrices. I will even show you how to write formulas to Excel to create calculated cells. These are important steps toward our goal of automating the creation of reports in Excel.

Before we begin the examples, Read more…
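For orientation, a rough sketch of writing a macro, a matrix, and a graph to Excel could look like this. The filenames, cell positions, and model are assumptions for illustration; picture() is the output type as documented for Stata 15, and newer releases may name it differently.

```stata
* Illustrative only: filenames, cells, and the regression are assumptions
sysuse auto, clear
putexcel set advanced_report.xlsx, replace

* A local macro holding a stored result
summarize mpg
local mean_mpg = r(mean)
putexcel A1 = "Mean mpg"
putexcel B1 = `mean_mpg'

* A matrix of regression results, written with its row and column names
regress price mpg weight
matrix results = r(table)'
putexcel A3 = matrix(results), names

* A graph, exported to PNG and then placed in the worksheet
histogram mpg
graph export mpg_hist.png, replace
putexcel A9 = picture(mpg_hist.png)
```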

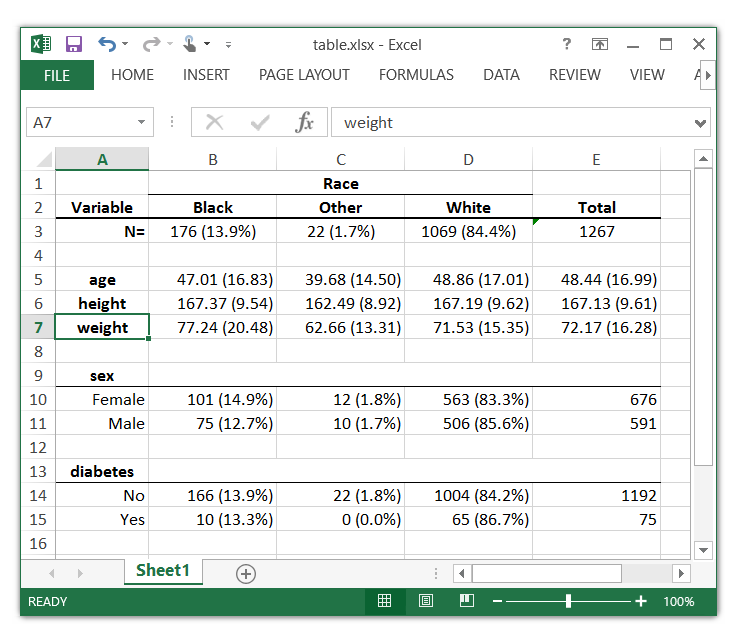

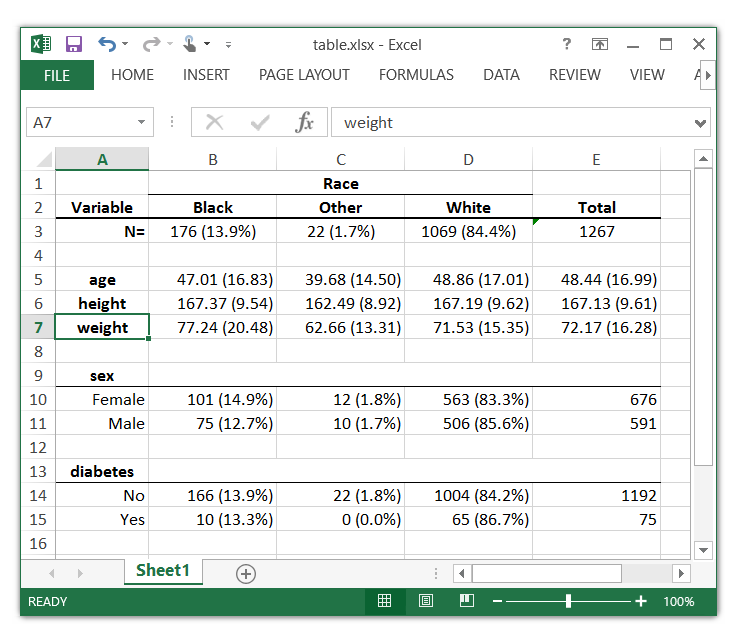

For a long time, I have wanted to type a Stata command like this,

. ExcelTable race, cont(age height weight) cat(sex diabetes)

The Excel table table.xlsx was created successfully

and get an Excel table that looks like this:

So I wrote a program called ExcelTable for my own use Read more…